Irrational Exuberance for 04/10/2019

Hi folks,

This is the weekly digest for my blog, Irrational Exuberance, and these are my posts from the last week. I'm always grateful to hear your thoughts and suggestions for topics to write about; drop me a tweet or a direct message on Twitter at @lethain.

Read posts on the blog:

- To innovate, first deprecate.
- Magnitudes of exploration.

To innovate, first deprecate.

Building on Magnitudes of exploration, which discusses an approach to balancing platform standardization against exploring for new, superior platforms, I wanted to look at the same problem from a systems thinking perspective, similar to my previous examination of Why limiting work-in-progress works.

The supporting Innovation notebook is on GitHub and Binder.

Standardization

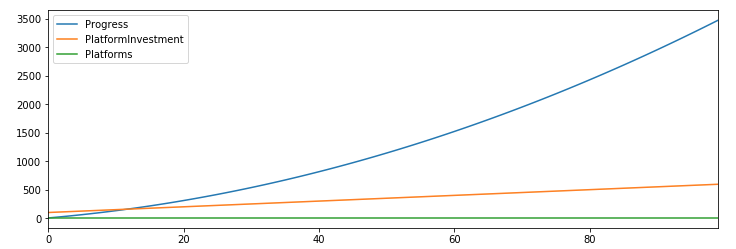

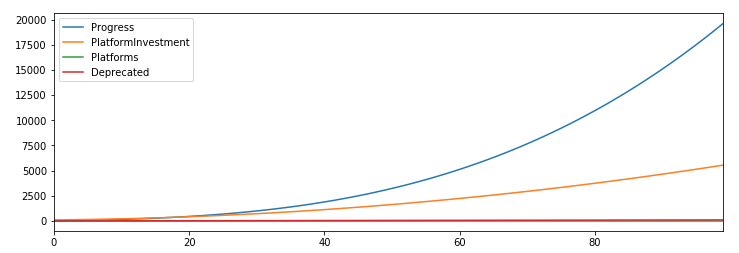

Starting out, we model a company that doesn't adopt new platforms, instead putting its entire investment budget into its existing platforms.

```
PlatformInvestment(100)
Platforms(10)
[Potential] > Platforms @ 0
[Potential] > PlatformInvestment @ 5
[Potential] > Progress @ PlatformInvestment / Platforms
```
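To make the mechanics concrete, here is a minimal plain-Python sketch of the model above. It is an illustrative re-implementation, not the notebook's code, and it assumes that `[Potential]` is an unlimited source, that every flow in a round is computed from the stocks at the start of that round, and that `Progress` starts at zero.

```python
def standardization(rounds: int = 100) -> float:
    """Return accumulated Progress for the standardization model (illustrative)."""
    platform_investment = 100  # PlatformInvestment(100)
    platforms = 10             # Platforms(10)
    progress = 0.0
    for _ in range(rounds):
        # [Potential] > Progress @ PlatformInvestment / Platforms
        progress += platform_investment / platforms
        # [Potential] > PlatformInvestment @ 5
        platform_investment += 5
        # [Potential] > Platforms @ 0, so the platform count never changes.
    return progress


print(standardization())  # 3475.0
```

Each round adds a little more progress than the last, because investment keeps growing while the number of platforms stays fixed; that is where the acceleration comes from.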

As they keep investing in their platforms, they get faster and faster over time.

It's not a flashy path to success, but it is a path to success.

Exploring a bit

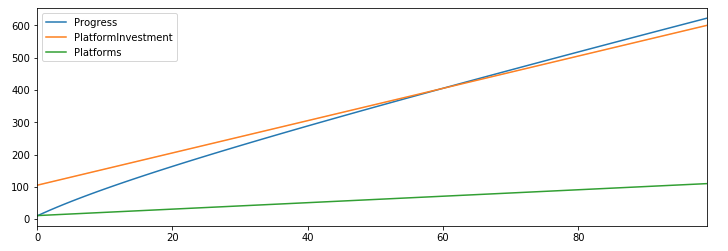

Changing things up, let's consider an organization that adds more platforms over time, splitting its investment across those platforms.

```
PlatformInvestment(100)
Platforms(10)
[Potential] > Platforms @ 1
[Potential] > PlatformInvestment @ 5
[Potential] > Progress @ PlatformInvestment / Platforms
```
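A plain-Python sketch under the same assumptions (an illustrative re-implementation, not the notebook's code, with each round's flows computed from start-of-round stocks) makes the difference explicit: the platform count now grows by one each round, while the investment is still divided across all of them.

```python
def simulate(new_platforms_per_round: int, rounds: int = 100) -> float:
    """Return accumulated Progress when platforms are added but never removed."""
    platform_investment = 100  # PlatformInvestment(100)
    platforms = 10             # Platforms(10)
    progress = 0.0
    for _ in range(rounds):
        # The whole investment is split across every platform still in use.
        progress += platform_investment / platforms
        platform_investment += 5              # [Potential] > PlatformInvestment @ 5
        platforms += new_platforms_per_round  # [Potential] > Platforms @ N
    return progress


standardized = simulate(new_platforms_per_round=0)
exploring = simulate(new_platforms_per_round=1)
print(f"standardized: {standardized:.1f}, exploring: {exploring:.1f}")
```

Investment per platform, (100 + 5t) / (10 + t), now trends toward a constant of 5, so progress per round flattens instead of accelerating.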

Hmm, I have a bad feeling about this one.

Compared with the standardization approach above, things don't work out very well for us: this naive approach to exploration spreads our investment thinner and thinner over time.

This experience may well resonate with some folks, but it's also a bit unrealistic. Who keeps every platform they've ever considered using?

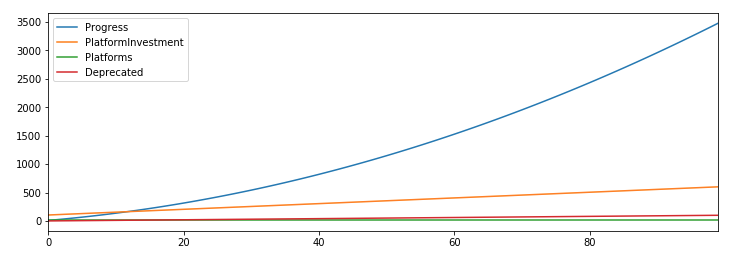

Deprecation

So let's factor in deprecation, getting rid of old platforms as we add in new ones.

```
PlatformInvestment(100)
Platforms(10)
[Potential] > Platforms @ 1
Platforms > Deprecated @ 1
[Potential] > PlatformInvestment @ 5
[Potential] > Progress @ PlatformInvestment / Platforms
```
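A plain-Python sketch of this model, under the same assumptions as before (an illustrative re-implementation, not the notebook's code, with flows computed from start-of-round stocks), shows why the result matches standardization exactly: adding and deprecating one platform per round leaves the count at ten.

```python
def simulate(added: int, deprecated: int, rounds: int = 100) -> float:
    """Return accumulated Progress; assumes deprecation never empties Platforms."""
    platform_investment = 100  # PlatformInvestment(100)
    platforms = 10             # Platforms(10)
    progress = 0.0
    for _ in range(rounds):
        progress += platform_investment / platforms
        platform_investment += 5  # [Potential] > PlatformInvestment @ 5
        platforms += added        # [Potential] > Platforms @ added
        platforms -= deprecated   # Platforms > Deprecated @ deprecated
    return progress


standardization = simulate(added=0, deprecated=0)
deprecation = simulate(added=1, deprecated=1)
print(standardization == deprecation)  # True
```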

Now we're exactly back to the results we got from standardization, albeit with a bit more complexity.

That said, we're hopefully not adopting platforms that are in every way equivalent to our previous ones.

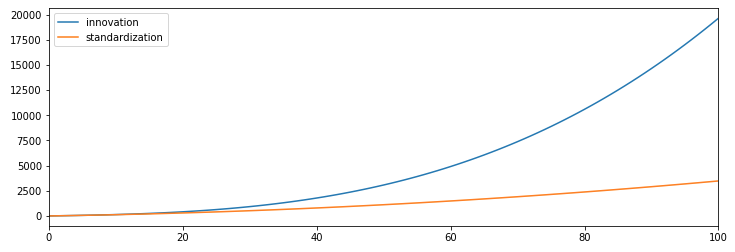

Innovation

Now let's factor in the idea of innovation, which is to say that we're careful to only adopt new platforms that are actually better than our existing ones in some way. We model that as an investment rate which itself grows each round:

```
PlatformInvestment(100)
Platforms(10)
InvestmentRate(5)
[Potential] > Platforms @ 1
Platforms > Deprecated @ 1
[Potential] > InvestmentRate @ 1
[Potential] > PlatformInvestment @ InvestmentRate
[Potential] > Progress @ PlatformInvestment / Platforms
```
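In the same hedged plain-Python form (not the notebook's code; flows computed from start-of-round stocks), innovation shows up as a growing investment rate, while one platform in and one out keeps the count fixed at ten:

```python
def simulate(rate_growth: int, rounds: int = 100) -> float:
    """Return accumulated Progress; rate_growth=0 reduces to standardization."""
    platform_investment = 100  # PlatformInvestment(100)
    platforms = 10             # Platforms(10); one added and one deprecated per round
    investment_rate = 5        # InvestmentRate(5)
    progress = 0.0
    for _ in range(rounds):
        progress += platform_investment / platforms
        platform_investment += investment_rate  # [Potential] > PlatformInvestment @ InvestmentRate
        investment_rate += rate_growth          # [Potential] > InvestmentRate @ 1
    return progress


standardization = simulate(rate_growth=0)
innovation = simulate(rate_growth=1)
print(f"standardization: {standardization:.0f}, innovation: {innovation:.0f}")
```

Because the investment rate grows linearly, investment grows quadratically, so the gap keeps widening the longer the simulation runs.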

Things are starting to look good for us.

If we compare these approaches, one focused on standardization and another that innovates by adding better platforms over time, the winning approach is clear.

However, note that we're being very rigorous about deprecating existing platforms at the rate that we're adopting them, to avoid spreading our investment across more tools.

Hmm, that's a bit optimistic.

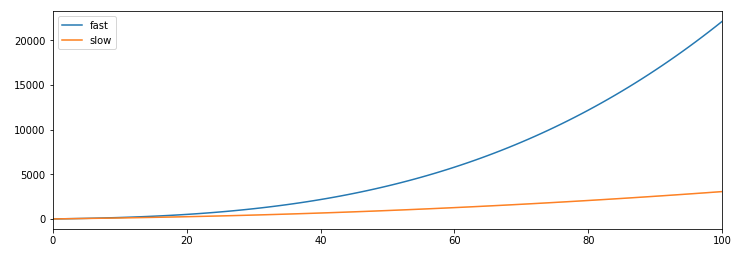

Deprecation rate

What if, as is often the case in companies with a decentralized approach to adopting new technologies, we find ourselves adopting better technologies (we're still innovating) but becoming less rigorous about deprecation?

Let's compare two models. The first introduces and deprecates platforms at the same rate:

```
PlatformInvestment(150)
Platforms(10)
InvestmentRate(15)
[Potential] > Platforms @ 3
Platforms > Deprecated @ 3
[Potential] > InvestmentRate @ 1
[Potential] > PlatformInvestment @ InvestmentRate
[Potential] > Progress @ PlatformInvestment / Platforms
```

The second model is identical, except that it deprecates only two platforms each round instead of matching the three newly introduced platforms.
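The two models differ only in the deprecation flow. Here is a plain-Python sketch of both, again an illustrative re-implementation under the start-of-round assumption rather than the notebook's code:

```python
def simulate(deprecated_per_round: int, rounds: int = 100) -> float:
    """Return accumulated Progress for the deprecation-rate models."""
    platform_investment = 150  # PlatformInvestment(150)
    platforms = 10             # Platforms(10)
    investment_rate = 15       # InvestmentRate(15)
    progress = 0.0
    for _ in range(rounds):
        progress += platform_investment / platforms
        platform_investment += investment_rate  # [Potential] > PlatformInvestment @ InvestmentRate
        investment_rate += 1                    # [Potential] > InvestmentRate @ 1
        platforms += 3                          # [Potential] > Platforms @ 3
        platforms -= deprecated_per_round       # Platforms > Deprecated @ 3 or 2
    return progress


matched = simulate(deprecated_per_round=3)
slow = simulate(deprecated_per_round=2)
print(f"matched: {matched:.0f}, slow: {slow:.0f}")
```

With slow deprecation the platform count grows by one every round, so even quadratically growing investment only buys linearly growing progress per round.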

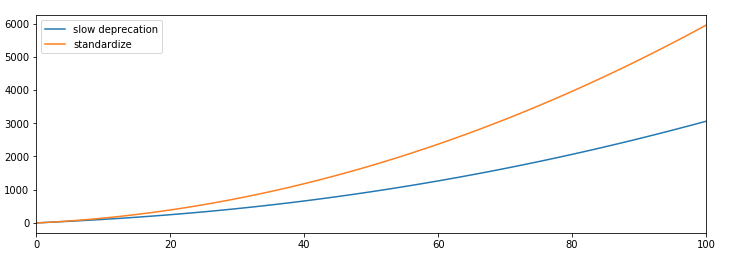

We can see that deprecating early is essential to gaining the largest upside of explorations, even if every tool you adopt is superior to your previous selections. Even more damningly, let's compare standardization against slow deprecation.

I think this is a startling result! If we aren't mature about deprecating old technologies when we adopt new ones, then it's better to skip the whole exploration bit and standardize on what we already have.
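The post doesn't list the parameters for the standardization side of this comparison, so the sketch below assumes it starts from the same PlatformInvestment(150) with a fixed InvestmentRate of 15 and no new platforms. Under that assumption, and the same start-of-round simplification as the other sketches, slow deprecation falls well behind:

```python
def simulate(added: int, deprecated: int, rate_growth: int, rounds: int = 100) -> float:
    """Return accumulated Progress; all parameters here are illustrative assumptions."""
    platform_investment = 150  # PlatformInvestment(150)
    platforms = 10             # Platforms(10)
    investment_rate = 15       # InvestmentRate(15)
    progress = 0.0
    for _ in range(rounds):
        progress += platform_investment / platforms
        platform_investment += investment_rate
        investment_rate += rate_growth   # 0 means no innovation
        platforms += added - deprecated  # net change in platform count
    return progress


standardization = simulate(added=0, deprecated=0, rate_growth=0)
slow_deprecation = simulate(added=3, deprecated=2, rate_growth=1)
print(f"standardization: {standardization:.0f}, slow deprecation: {slow_deprecation:.0f}")
```

The outcome is sensitive to the assumed parameters: a standardization baseline with a much smaller investment rate can end up roughly level with slow deprecation, so treat this as an illustration of the mechanism rather than a reproduction of the chart.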

Back in reality

When looking at these results, note that they cover one hundred rounds and assume we can explore and deprecate a technology within a single round. For most companies a round would be at least six months, and potentially much longer, so one hundred rounds represents fifty years or more.

There is still something important to learn from this exercise: if you want to become a truly innovative company, the two fundamental skills are determining whether a new technology is significantly superior to existing ones, and deprecating old technology.

Magnitudes of exploration.

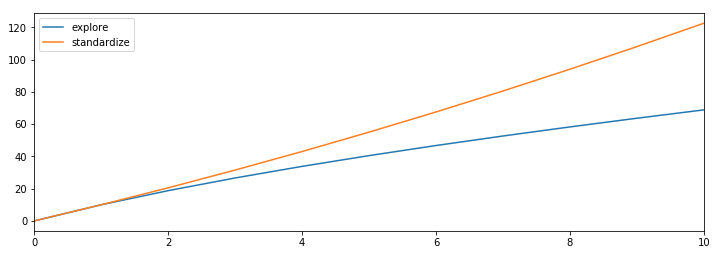

Mostly standardize, explorations should drive order of magnitude improvements, and limit concurrent explorations.

Standardizing technology is a powerful way to create leverage: improve tooling a bit and every engineer will get more productive. Adopting superior technology is, in the long run, an even more powerful force, with successes compounding over time. The tradeoffs and timing between standardizing on what works, exploring for superior technology, and supporting adoption of superior technology are at the core of engineering strategy.

An effective approach is to prioritize standardization, while explicitly pursuing a bounded number of explorations that are pre-validated to offer at least an order of magnitude improvement over the current standard.

Many thanks to my esteemed colleague Qi Jin, who has spent quite a few hours discussing this topic with me recently.

Standardization

Standardization is focusing on a handful of technologies and adapting your approaches to fit within those technologies' capabilities and constraints.

Fewer technologies support deeper investment in each, and reduce the cost of ensuring clean integration. This narrowness simplifies writing great documentation, running effective training, providing excellent tooling, and so on.

The benefits are even more obvious when operating technology in production and at scale. Reliability is the byproduct of repeated, focused learning (often in the form of incidents and incident remediation), and running at significant scale requires major investment in tooling, training, preparation and practice.

Importantly, improvements to an existing standard are the only investments an organization can make that don't create organizational risk. Investing in new technologies creates unknowns that the organization must invest in understanding; investing more in the existing standards is at worst neutral. (This ignores opportunity cost; more on that in a moment.)

I am unaware of any successful technology company that doesn't rely heavily on a relatively small set of standardized technologies; the combination of high leverage and low risk that the approach offers is fairly unique.

Exploration

Technology exploration is experimenting with a new approach or technology, with clear criteria for adoption beyond the experiment.

Applying its adoption criteria, an exploration either proceeds to adoption or halts with a trove of data:

- proceeds - the new technology is proven out, leading to a migration from the previous approach to the new one.

- halts - the new technology does not live up to its promise, and the experiment is fully deprecated.
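One way to keep an exploration this constrained is to write its adoption criteria down before it starts, so the proceed-or-halt decision is mechanical. The sketch below is a hypothetical illustration: the `Exploration` class, the metric names, and the thresholds are all made up for the example.

```python
from dataclasses import dataclass
from enum import Enum
from typing import Dict


class Outcome(Enum):
    PROCEED = "proceed"  # migrate from the previous approach to the new one
    HALT = "halt"        # fully deprecate the experiment


@dataclass
class Exploration:
    technology: str
    # Hypothetical adoption criteria: metric name -> minimum value to adopt.
    criteria: Dict[str, float]

    def evaluate(self, measured: Dict[str, float]) -> Outcome:
        """Proceed only if every criterion is measured and met."""
        for metric, minimum in self.criteria.items():
            # An unmeasured metric counts as unproven, so it fails the bar.
            if measured.get(metric, float("-inf")) < minimum:
                return Outcome.HALT
        return Outcome.PROCEED


trial = Exploration("streaming pipeline", {"freshness_speedup": 10.0})
print(trial.evaluate({"freshness_speedup": 24.0}))  # Outcome.PROCEED
```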

This definition is deliberately quite constrained in order to focus the discussion on the value of effective exploration. It's easy to make the case that poorly run exploration leads to technical debt and organizational dysfunction, just as easy as making a similar case against poorly run standardization efforts.

The fundamental value of exploration comes from the fact that your current tools have brought you close to some local maximum, while you're still very far from the global maximum. Even if you're near the global maximum today, it'll keep moving over time, and trending towards it requires ongoing investment.

Each successful exploration slightly accelerates your overall productivity. The first doesn't change things too much, and neither does the second, but as you continue to successfully complete explorations, their technical leverage begins to compound, slowly and subtly becoming the most powerful creator of technical leverage.

Tension

While both standardization and exploration are quite powerful, they are often at odds with each other. You reap few benefits of standardization if you're continually exploring, and rigid standardization freezes out the benefits of exploration.

On internal engineering practices at Amazon describes the results of over-standardization: once-novel tooling struggles to keep up with the broader ecosystem's reinvention and evolution, leading to poor developer experiences, weak tooling, and ample frustration. (A quick caveat: I'm certain the post doesn't reflect every development experience at Amazon; a company that large has many different lived experiences depending on team, role and perspective.)

If over-standardization is an evolutionary dead end, leaving you stranded on top of one local maximum with no means to progress towards the global maximum, a predominance of exploration doesn't work particularly well either.

In the first five years of your software career, you're effectively guaranteed to encounter an engineer or a team who refuse to use the standardized tooling and instead introduce a parallel stack. The initial results are wonderful, but finishing the work and then transitioning into operating that technology in production gets harder. This is often compounded by the folks introducing the technology getting frustrated by having to operate it, and looking to hand off the overhead they've introduced to another team. If that fails, they often leave the team or the company, implicitly offloading the overhead.

Balancing these two approaches is essential. Every successful technology company imposes varying degrees of control on introducing new technologies, and every successful technology company has mechanisms for continuing to introduce new ones.

An order of magnitude improvement

I've struggled for some time to articulate the right tradeoff here, but in a recent conversation with my coworker Qi Jin, he suggested a rule of thumb that resonates deeply.

Standardization is so powerful that we should default to consolidating on few platforms, and invest heavily into their success. However, we should pursue explorations that offer at least an order of magnitude improvement over the existing technology.

The new technology shouldn't improve on a single dimension while regressing meaningfully on others. Instead, it should be approximately as strong on all dimensions, and at least one dimension must show at least an order of magnitude improvement.

A few possible examples, although they'd all require significant evidence to prove the improvement:

- moving to a storage engine that is about as fast and as expressive, but is ten times cheaper to operate,

- changing from a batch compute model like Hadoop to a streaming computation model like Flink, enabling the move from a daily cadence of data to a real-time cadence (assuming you can keep the operational complexity and costs relatively constant),

- moving from engineers developing on their laptops and spending days debugging issues to working on VMs which they can instantly reprovision from scratch when they encounter an environment problem.
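The rule can be stated as a small check. This is a hypothetical sketch: it assumes every dimension is expressed so that higher is better (for example, throughput, or one divided by cost), and it reads "approximately as strong" as "no more than 10% below the baseline", an illustrative tolerance rather than part of the rule.

```python
from typing import Dict


def meets_rule(baseline: Dict[str, float], candidate: Dict[str, float],
               tolerance: float = 0.1) -> bool:
    """True if no dimension regresses past `tolerance` and one improves 10x or more.

    Assumes every metric is oriented so that higher is better.
    """
    if baseline.keys() != candidate.keys():
        raise ValueError("baseline and candidate must measure the same dimensions")
    ratios = [candidate[name] / baseline[name] for name in baseline]
    no_meaningful_regression = all(r >= 1 - tolerance for r in ratios)
    order_of_magnitude_win = any(r >= 10 for r in ratios)
    return no_meaningful_regression and order_of_magnitude_win


# Loosely modeled on the storage engine example: about as fast, ten times cheaper.
current = {"speed": 100.0, "expressiveness": 1.0, "cost_efficiency": 1.0}
cheaper = {"speed": 95.0, "expressiveness": 1.0, "cost_efficiency": 10.0}
print(meets_rule(current, cheaper))  # True
```

A candidate that is twenty times cheaper but half as fast fails the check, which is the "single dimension with meaningful regressions" case the rule excludes.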

The most valuable, and unsurprisingly the hardest, part is quantifying the improvement and agreeing that the baselines haven't degraded. It's quite challenging to compare the perfect vision of something non-existent with the reality of something flawed but real, which is why explorations are essential to pull both into reality for a measured evaluation.

Limit work-in-progress

Reflecting on my experience with technology change, I believe that adding one more constraint to the "order of magnitude improvement" rule makes it even more useful: maintain a fixed limit on the number of explorations that can be ongoing at any given time.

By constraining the explorations we pursue simultaneously to a small number (perhaps two or three for a thousand-person engineering organization), we manage the risk of technical debt accretion, which protects our ability to continue making explorations over time. Many companies don't constrain exploration, and quickly find themselves in a position where they simply can't risk making any more.
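Mechanically, this is a work-in-progress limit. Here is a hypothetical sketch of a registry that refuses new explorations once the limit is reached; the class, its method names, and the exploration names are placeholders invented for illustration.

```python
from typing import Set


class ExplorationPortfolio:
    """Tracks ongoing explorations and enforces a fixed concurrency limit."""

    def __init__(self, max_concurrent: int) -> None:
        self.max_concurrent = max_concurrent
        self.active: Set[str] = set()

    def try_start(self, name: str) -> bool:
        """Start an exploration if a slot is free; return whether it is running."""
        if name in self.active:
            return True  # already running, so it doesn't take a second slot
        if len(self.active) >= self.max_concurrent:
            return False
        self.active.add(name)
        return True

    def finish(self, name: str) -> None:
        """Free a slot once the exploration is adopted and migrated, or fully deprecated."""
        self.active.discard(name)


portfolio = ExplorationPortfolio(max_concurrent=3)
for idea in ["streaming compute", "new storage engine", "dev VMs", "service mesh"]:
    print(idea, portfolio.try_start(idea))
```

Finishing should only count once the migration or deprecation is actually complete; releasing a slot earlier would let half-finished explorations accumulate, which is exactly the debt the limit exists to prevent.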

That's a rough state to remain in.

Being able to continue making discoveries is essential, because the true power of exploration is not the first step, but the "nth plus one" step, compounding leverage all along the way.

There isn't a single rule or approach that captures the full complexities of every situation, but a solid default approach can take you a surprisingly long way.

I've been in quite a few iterations of "standardize or explore" strategy discussions, and this is the best articulation I've found so far of what works in practice: mostly standardize, explorations should drive order of magnitude improvement, and limit concurrent explorations.

That's all for now! Hope to hear your thoughts on Twitter at @lethain!
